The Rise of AI: We're in serious trouble if artificial intelligence gets any smarter

ChatGPT is fueling an AI competition that's accelerating our path toward the "singularity," and experts warn that's bad news for humans.

Note: I wrote this because I can’t stop thinking about the future. This is a story about computers — computers that keep getting smarter because humans can't stop making them smarter. What a person could spend a lifetime mastering, these bots can do in a second. They never get tired. They don’t get complacent. They know everything about everything.

This future is already here and the consequences will be extraordinary.

This is Part I of a two-part series. Part II is here.


With the rise of OpenAI’s viral language tool, ChatGPT, artificial intelligence has entered the public conversation like never before. The competition unfolding among tech giants like Microsoft and Google will only accelerate the path toward what experts call the “singularity.”

You probably recognize the word. It describes a situation where, for better or worse, conventional thinking no longer applies. Mathematician and writer Vernor Vinge used it in a 1993 paper to describe the moment our technology’s intelligence exceeds our own. Futurist Ray Kurzweil defined the singularity as the time when the Law of Accelerating Returns — a rule that says the rate of evolutionary progress increases exponentially — reaches such an extreme that technological progress becomes effectively infinite.

Plenty of people still dismiss AI and question the plausibility of a so-called singularity. In 2014, Oren Etzioni, chief executive of the Allen Institute for Artificial Intelligence, called the fear of machines a "Frankenstein complex." Other heavy-hitters have likened AI alarmism to religious extremism and doomsday prepping, or to worrying about overpopulation on Mars.

Nonetheless, a growing chorus of intellectuals, including the late Stephen Hawking, has predicted that the singularity will bring a change as dramatic as the initial emergence of human life.

The future is no longer in the future

Until recently, most of us associated AI with science fiction. Star Wars and Star Trek. Blade Runner. 2001: A Space Odyssey. Books and movies tell us AI means robots, and those robots are always presented as if they exist only in a galaxy far, far away.

But AI has little to do with robots. It’s far more ubiquitous than the current spike in enthusiasm suggests. AI’s been in regular use for years, and it certainly doesn’t need a physical body to operate. Apple’s voice assistant Siri, for example, is a form of AI. Tesla’s Autopilot driver-assistance system relies on AI. Chess engines can beat grandmasters, and IBM’s Watson famously trounced Jeopardy! champions in 2011.

Computer scientist John McCarthy coined the term “artificial intelligence” in a 1955 proposal for a 1956 workshop at Dartmouth, but he complained that anything slapped with that label was considered experimental or impractical.

“As soon as it works, no one calls it AI anymore,” he said.

Indeed, when something new powered by AI works well, it makes a splash. But until now, even the biggest leaps have become trivial with time. The internet today is more pedestrian than ingenious, and smartphones no longer make jaws drop. Even though the iPhone has more than 100,000 times the computing power of the spacecraft that took Neil Armstrong to the moon, the technology’s become commonplace.

Still, Vinge predicted that something else would become commonplace: the acceptance that AI will come to dominate its creators.

A thinking machine

In 1950, Alan Turing published a seminal paper positing that a machine could be said to “think” if it could pass his so-called imitation game, now known as the Turing Test. (Benedict Cumberbatch portrayed Turing in the 2014 film The Imitation Game.)

To pass, a computer has to converse naturally enough to convince a human judge that it, too, is human. Only two programs have reportedly done so in seven decades: Google’s LaMDA model last summer and, in 2014, a chatbot called Eugene Goostman, which simulates a 13-year-old Ukrainian boy and is said to have passed at the Royal Society in London.

The jury’s still out on whether ChatGPT has passed it, but OpenAI is already on the verge of releasing the next iteration of the language bot. Chinese tech giants Baidu and Alibaba are reportedly working on rivals, too.

Jan Szilagyi, the chief executive of financial analytics firm Toggle AI, told me the use of AI language processors will soon become table stakes. He remains optimistic about the technology’s potential to change things for the better. Other executives in the field have told me the same over recent weeks, though it’s generally true that the people most upbeat about AI tend to work in it.

Innovation for innovation’s sake

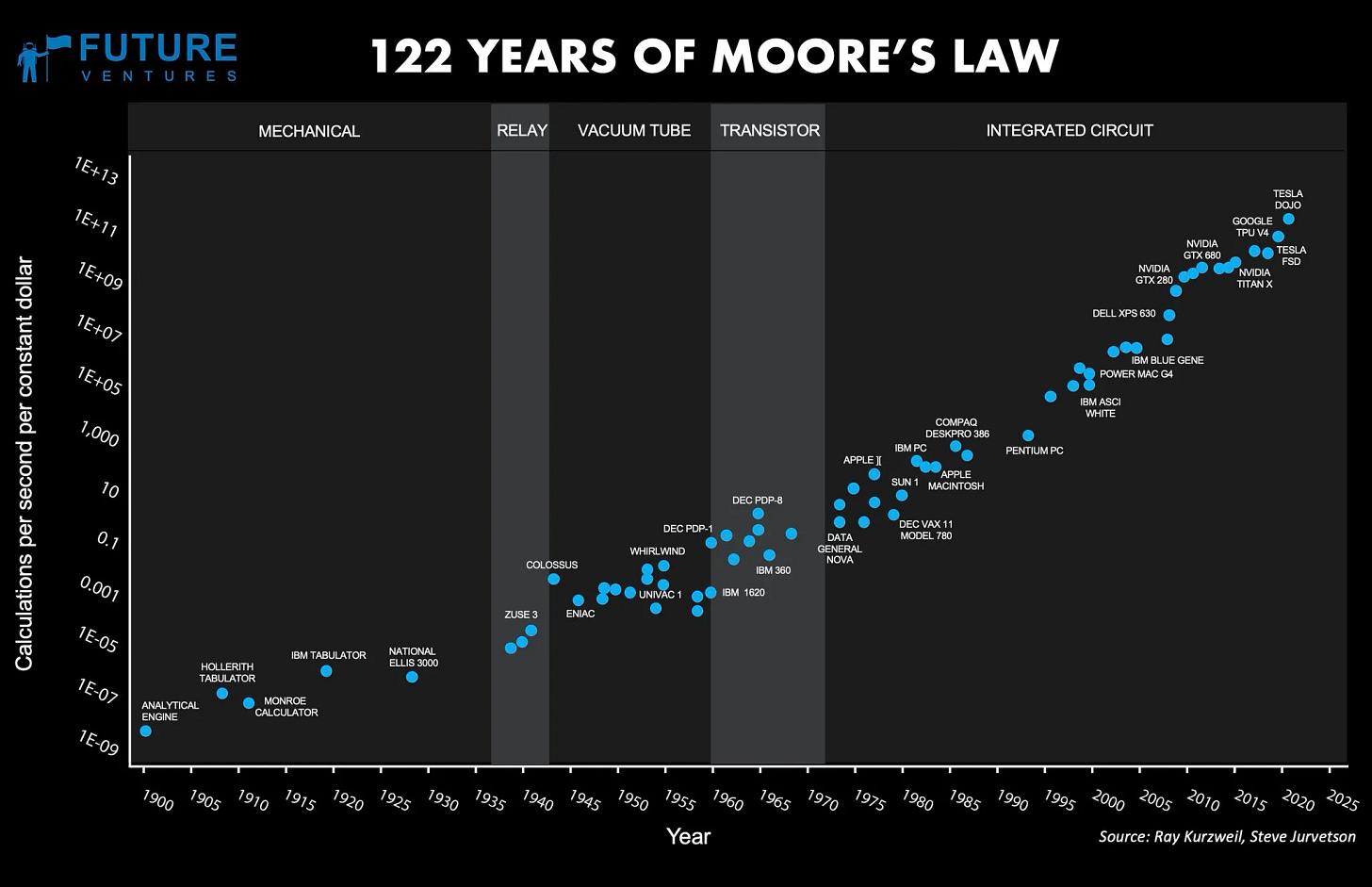

Moore’s Law holds that the number of transistors on a microchip, and with it the available computing power, doubles about every two years. In other words, computing progress is exponential rather than linear, much like the Law of Accelerating Returns.

The rule has broadly held since Gordon Moore first proposed it in 1965 (he revised the doubling period from one year to two in 1975). Some have forecast that it will become obsolete by around 2035, not because we stop progressing, but because progress becomes too rapid.

As for ChatGPT, we know it can write articles, emails, and real-estate listings, among other tasks, convincingly enough that people are worried about job security. If Moore’s Law is any guide, it won’t take long for the bot to handle more complex work.

But AI alarmists probably didn’t have a large language model in mind when they forecast the singularity. Moore’s Law aside, most predictions have AI reaching human-level intelligence sooner rather than later. Extrapolate further, bet on the human tendency to innovate for innovation’s sake, and superintelligence starts to look inevitable.

Elon Musk, whose company Neuralink is working toward implanting chips in human brains, has warned that AI is the scariest thing civilization faces. Bill Gates has likewise warned of the serious challenges AI poses: “When people say it’s not a problem, then I really start to get to a point of disagreement. How can they not see what a huge challenge this is?”

In Vinge’s view, once we invent a superhuman AI, it will be the last invention humans ever make.

“For all my rampant technological optimism, sometimes I think I'd be more comfortable if I were regarding these transcendental events from one thousand years remove[d],” he said, “instead of twenty.”

The inevitable superintelligence

We’ve always operated under the correct assumption that nothing else on Earth is more intelligent than us. Yes, animals can be smart, but they’ve never posed anything close to an intellectual threat.

Not only that, but our perception of even human-level intelligence is highly distorted, as Tim Urban highlighted in 2015. We see the gap between, say, Albert Einstein and a very dumb human as a dramatic leap. But measured against the full range of possible intelligence, the difference is effectively zero.

That’s to say we likely won’t even notice the moment AI becomes as smart as the dumbest human, because it’s going to blow past Einstein almost immediately afterward. To a machine, there’s virtually no difference between an IQ of 70 and 180.

Computer scientists distinguish between two kinds of AI progress: speed and quality. Superintelligence won't simply be a program that processes information faster than a person (we already have that). Speed is powerful on its own, but the real separation would come from the quality of a computer's intelligence. Think about us compared to apes. Even if we could somehow make an ape think 500 times faster, it still wouldn't demonstrate human intelligence, because its brain lacks the necessary cognitive architecture. Speed alone won't let an ape grasp the concepts humans can.

The intelligence gap between us and apes is not that big, yet only we can build governments and skyscrapers. That's what a tiny difference in intelligence quality can do. By the same logic, humans won't be able to grasp what a very smart AI could create, even if it tried to teach us. Oxford philosopher Nick Bostrom argued in his 2014 book Superintelligence: Paths, Dangers, Strategies that once AI surpasses human intelligence, it will usher in a new era that could leave humans with the worse end of the deal.

A functional superintelligence, Bostrom explained, could be expected to spontaneously generate goals like self-preservation and cognitive enhancement. The latter ties into the idea of “recursive self-improvement,” in which a program modifies itself to boost its own intelligence.

The trouble here is that a rapidly learning AI wouldn’t see human-level intelligence as a milestone worth stopping at. AI has no reason to stop progressing. Given the exponential gains that technology already exhibits, any AI would likely spend only a brief time at human-level intelligence before shooting past it into superhuman territory.

This would be an existential threat, according to Bostrom.

All of this may seem like a shoo-in, but it will shock the world when it happens anyway, and the realization will hit humanity like an anvil.

“Within thirty years, we will have the technological means to create superhuman intelligence,” Vinge wrote thirty years ago. “Shortly after, the human era will be ended.”

Settling for second fiddle, or worse

In a 1965 paper, Irving John Good described an “intelligence explosion”: once a machine surpasses human intelligence, it can design even better machines, which in turn design better ones still. It is the Law of Accelerating Returns taken to its extreme. We simply don’t have the mental framework to understand the implications.

Trying to comprehend just how smart a really smart AI could become compared to a human would be like asking a houseplant to understand Heidegger’s Being and Time. Again, if mere humans have been able to invent the Large Hadron Collider and Wi-Fi, think about what something 1,000 times smarter could build.

Bostrom’s concern is that we won’t have the foresight or ability to program this superintelligent thing to keep us in the loop on its decisions. At best, we could find ourselves playing second fiddle, almost like pets, to superintelligent entities.

At worst, well, use your imagination.

The reality is that the smartest species on Earth has always been the most powerful, and for as long as anyone can remember, that’s been us. Follow this line of thinking, and once AI outmatches human intelligence by even a sliver, the party’s over. Our tiny brains will be outclassed on every front. In a 2015 interview, Bostrom said this technological advancement could bring annihilation. As we barrel toward the inevitable, there is little we can do to stop it, and the endgame will still come as a complete surprise.

“Before the prospect of an intelligence explosion, we humans are like small children playing with a bomb,” Bostrom said. “We have little idea when the detonation will occur, though if we hold the device to our ear we can hear a faint ticking sound.”
